Today's mission-critical storage environments need greater consistency, predictability, and performance to keep up with business demands.

Data centers require more I/O capacity to accommodate massive amounts of data, apps, and workloads.

As this data explosion continues, so do our overall expectations for its availability.

Users now expect to be able to use and access applications anytime, anywhere, on any device.

To meet these dynamic and growing business demands, organizations need to rapidly deploy and scale applications.

As a result, many enterprises are moving to environments with more virtual machines (VMs) and deploying flash storage so they can roll out new applications quickly and scale them to support thousands of users.

Conventional IEEE 802.3 Ethernet does not guarantee delivery: frames sent over the network between the initiator and target can be dropped, which can negatively impact the performance of applications that are sensitive to data loss.

Storage performance is particularly sensitive to packet loss.

To compensate for loss, TCP guarantees data delivery at the transport layer by sequencing data segments and retransmitting them when loss occurs.

However, TCP retransmissions of storage traffic significantly degrade the performance of applications that rely on that storage.

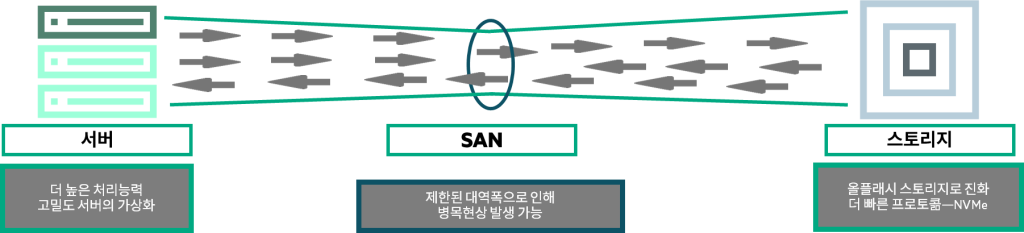

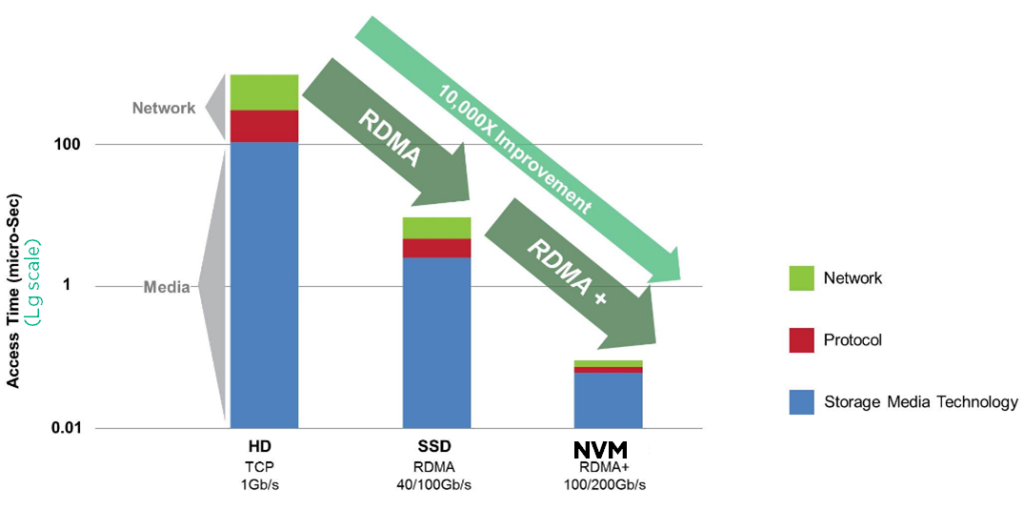

Recent advancements in next-generation servers, the rise of server virtualization, the introduction of flash-based storage, and new storage technologies like NVMe have enabled storage read/write performance that surpasses what existing storage networking protocols like Fibre Channel (FC) can deliver. The performance bottleneck in SANs has now shifted from the storage media to the network.

To increase agility, reduce costs, and fully leverage the benefits of flash-based architectures, you need a network that can deliver the performance required by today's server and storage environments.

What is RoCE (RDMA over Converged Ethernet)?

RoCE is a network protocol that allows RDMA over Ethernet networks. RDMA allows data to be moved between servers with minimal CPU intervention.

RoCE combines the benefits of Ethernet and Data Center Bridging (DCB) with RDMA technology to reduce CPU overhead and improve the performance of enterprise data center applications.

With the rise of AI, machine learning, and high-performance computing (HPC) applications, RoCE is designed to support increasingly data-intensive applications, ensuring higher performance and lower latency.

RoCE is designed to deliver superior performance within advanced data center architectures by integrating compute, network, and storage into a single fabric. Converged solutions like RoCE are gaining popularity in modern data centers because they handle both lossless and lossy traffic.

Solutions like Server Message Block Direct are driving the growth of hyper-convergence, IP storage, and RoCE-based solutions.

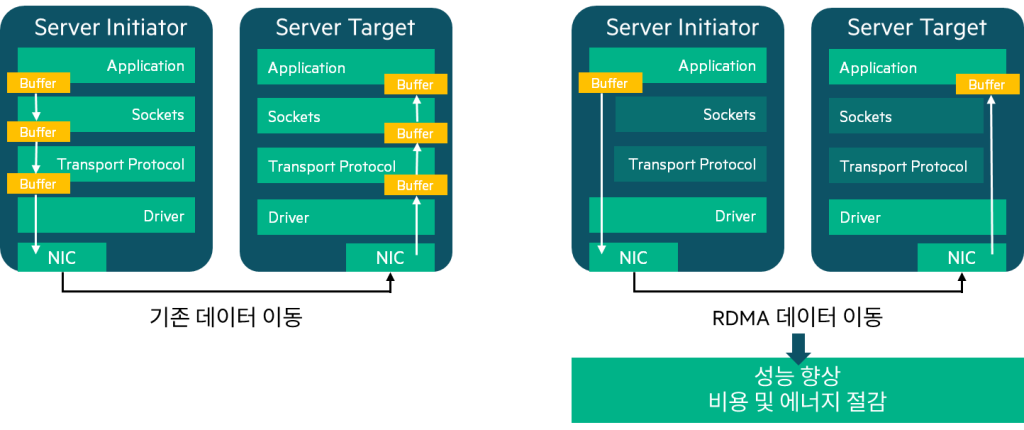

RDMA (Remote Direct Memory Access)

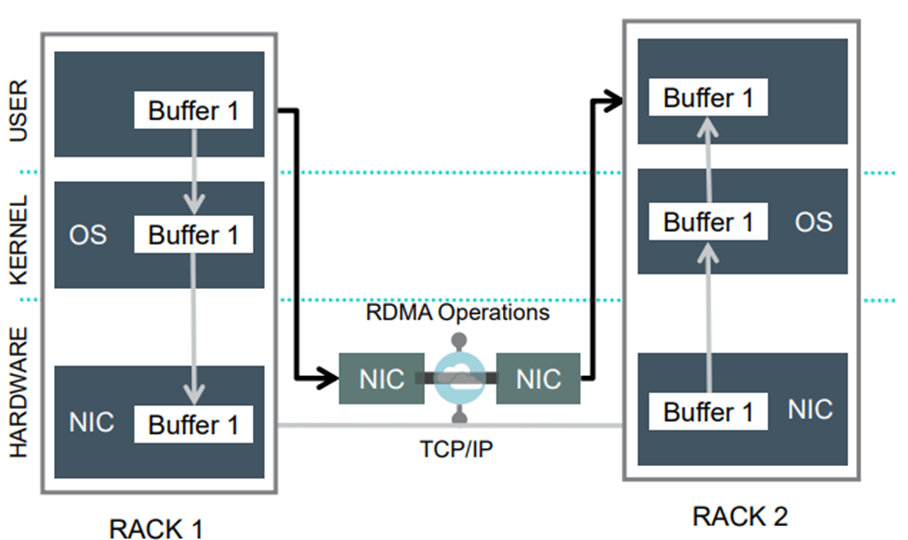

RDMA is a technology that gives one system direct access to the memory of another.

RDMA facilitates data transfer between the working memory of two systems without burdening the CPUs of both systems.

Without RDMA, applications have to rely on the OS to move data from the application's virtual buffer space through the network stack and onto the wire.

The recipient's OS must then retrieve the data and place it into the receiving application's virtual buffer space. The CPU is typically occupied for the entire duration of the read or write operation and is unable to perform other tasks.

On the other hand, when applications use RDMA, data movement is handled by RDMA-capable NICs, reducing CPU overhead for these operations. This frees up server CPU resources for other activities without compromising I/O performance.

In addition, RDMA reduces latency, improves resource utilization, allows flexible resource allocation, and supports fabric integration and scalability. It also increases server productivity, which reduces the need for additional servers and lowers total cost of ownership.

RDMA requires a reliable, low-latency network infrastructure to meet the data rates of today's applications.

There are several ways to meet the requirements of RDMA, and InfiniBand is a representative solution.

RoCE topology

RoCE leverages the capabilities of RDMA to provide fast communication between applications running on servers and storage arrays.

RDMA was first used in InfiniBand (IB) fabrics. RoCE is essentially built by replacing the IB link layer with an Ethernet link layer to transport data. Data centers can now get the benefits of RDMA on a converged, high-performance infrastructure that also supports TCP/IP.

RoCE comes in two versions.

- RoCEv1: An Ethernet link layer protocol that allows two hosts in the same Ethernet broadcast domain to communicate.

- RoCEv2: Allows routing of traffic by replacing the IB network layer with standard IP and UDP headers.

Since packet encapsulation includes IP and UDP headers, RoCEv2 can be used in both L2 and L3 networks, which increases scalability.

Lossless network
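The difference between the two encapsulations can be sketched in a few lines of Python. This is only an illustration, assuming untagged Ethernet frames and IPv4; the function name `classify_roce` is made up for this example. It relies on two well-known values: RoCEv1 uses EtherType 0x8915, and RoCEv2 uses UDP destination port 4791.

```python
import struct

ROCE_V1_ETHERTYPE = 0x8915   # RoCEv1 is carried directly in an Ethernet frame
IPV4_ETHERTYPE = 0x0800
UDP_PROTOCOL = 17
ROCE_V2_UDP_PORT = 4791      # UDP destination port used by RoCEv2
ETH_HEADER_LEN = 14          # assumption: no 802.1Q VLAN tag present


def classify_roce(frame: bytes) -> str:
    """Return 'RoCEv1', 'RoCEv2', or 'other' for an untagged Ethernet frame."""
    if len(frame) < ETH_HEADER_LEN:
        return "other"
    (ethertype,) = struct.unpack("!H", frame[12:14])
    if ethertype == ROCE_V1_ETHERTYPE:
        return "RoCEv1"
    if ethertype == IPV4_ETHERTYPE and len(frame) >= ETH_HEADER_LEN + 20:
        ihl = (frame[ETH_HEADER_LEN] & 0x0F) * 4      # IPv4 header length in bytes
        protocol = frame[ETH_HEADER_LEN + 9]          # IPv4 protocol field
        udp_offset = ETH_HEADER_LEN + ihl
        if protocol == UDP_PROTOCOL and len(frame) >= udp_offset + 4:
            (dport,) = struct.unpack("!H", frame[udp_offset + 2:udp_offset + 4])
            if dport == ROCE_V2_UDP_PORT:
                return "RoCEv2"
    return "other"
```

Because a RoCEv2 packet is an ordinary UDP/IP packet from the network's point of view, any router can forward it, whereas a RoCEv1 frame is identified only at the Ethernet layer and stays within one broadcast domain.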

A lossless network is a network in which the devices that make up the network fabric are configured to prevent packet loss using the DCB protocol.

One of the primary goals of designing a network solution that integrates RoCE is to deploy a lossless fabric.

Although the RoCE standard does not require a lossless network, RoCE performance may degrade if a lossless network is not provided.

Given this, it is recommended to consider lossless fabric as a requirement for RoCE implementation.

Lossless fabrics can be built on Ethernet fabrics by leveraging the DCB protocol.

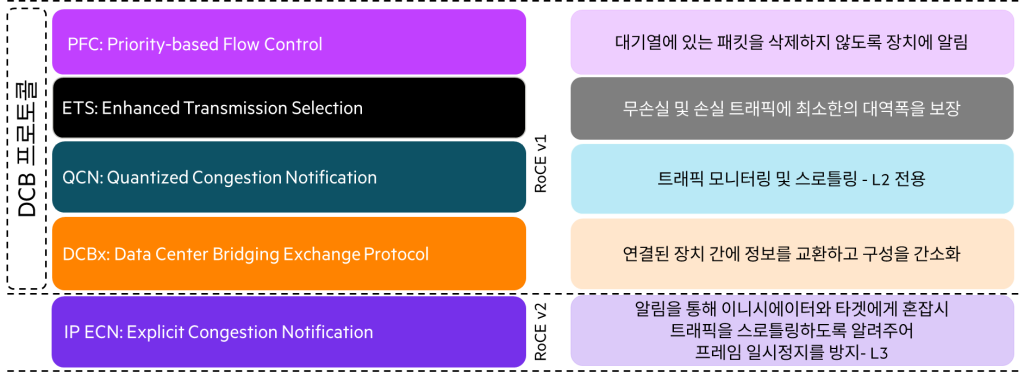

DCB (Data Center Bridging) protocol

The following DCB protocols are used to build a lossless fabric on an Ethernet fabric:

- Priority-based Flow Control (PFC)

- Enhanced Transmission Selection (ETS)

- Data Center Bridging Exchange (DCBx)

- Quantized Congestion Notification (QCN)

- IP Explicit Congestion Notification (ECN)

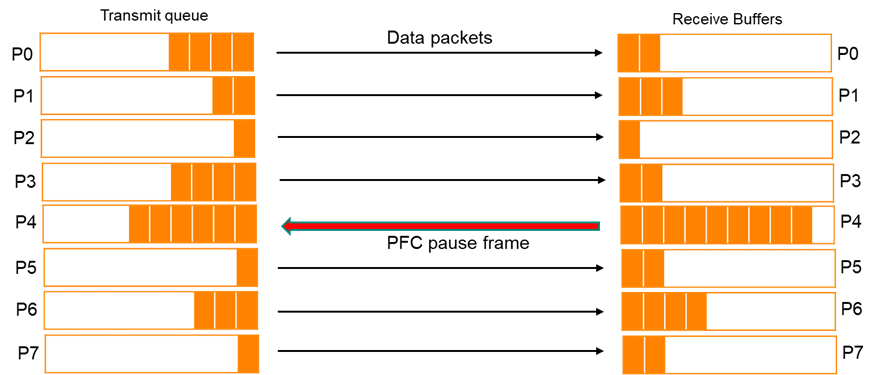

Priority-based Flow Control

PFC, defined in IEEE 802.1Qbb, is a link-level flow control mechanism.

This flow control mechanism is similar to that used in IEEE 802.3x Ethernet PAUSE, but operates on an individual priority basis.

Instead of pausing all traffic on a link, PFC allows you to selectively pause traffic based on its class.

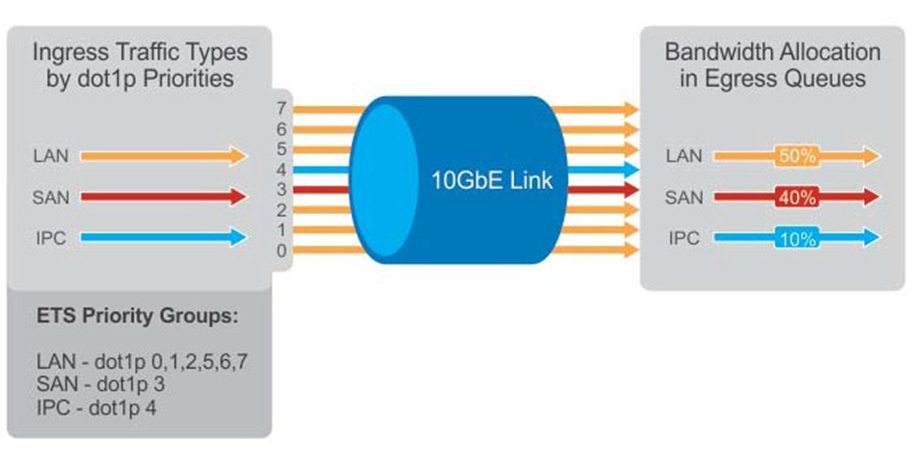
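To make the per-priority behavior concrete, the following Python sketch builds the bytes of a PFC frame. It is a minimal illustration, not switch code: the function name `build_pfc_frame` and its arguments are made up for this example. The frame layout follows the MAC Control format used by PFC: destination MAC 01:80:C2:00:00:01, EtherType 0x8808, opcode 0x0101, a priority-enable vector, and eight pause timers.

```python
import struct

PFC_DEST_MAC = bytes.fromhex("0180c2000001")  # reserved MAC Control address
MAC_CONTROL_ETHERTYPE = 0x8808
PFC_OPCODE = 0x0101                           # 0x0001 would be a classic 802.3x PAUSE


def build_pfc_frame(src_mac: bytes, pause_quanta: dict) -> bytes:
    """Build a PFC frame that pauses only the priorities listed in pause_quanta.

    pause_quanta maps a priority (0-7) to a pause time in quanta (0-65535).
    One quantum is 512 bit times. Priorities not listed keep transmitting.
    """
    if len(src_mac) != 6:
        raise ValueError("src_mac must be 6 bytes")
    enable_vector = 0
    timers = [0] * 8
    for priority, quanta in pause_quanta.items():
        if not 0 <= priority <= 7:
            raise ValueError("priority must be 0-7")
        if not 0 <= quanta <= 0xFFFF:
            raise ValueError("quanta must fit in 16 bits")
        enable_vector |= 1 << priority        # one bit per paused priority
        timers[priority] = quanta
    payload = struct.pack("!HH8H", PFC_OPCODE, enable_vector, *timers)
    frame = PFC_DEST_MAC + src_mac + struct.pack("!H", MAC_CONTROL_ETHERTYPE) + payload
    return frame.ljust(60, b"\x00")           # pad to minimum frame size (FCS excluded)
```

For example, `build_pfc_frame(bytes(6), {3: 0xFFFF})` asks the link partner to pause only priority 3 for the maximum time, while the other seven priorities continue to flow. Sending a timer of 0 for a priority resumes it.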

Enhanced Transmission Selection

ETS provides configurable bandwidth guarantees for all queues.

For PFC queues, a certain percentage of the link bandwidth is guaranteed so that lossless flows are not starved by flows in other queues.

This configuration ensures that each traffic queue gets a minimum amount of bandwidth.
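The effect of these guarantees can be sketched with a small calculation. The Python function below is a simplified model, not the scheduler a switch actually runs: the name `ets_allocate`, the class names, and the assumption that unused bandwidth is redistributed in proportion to the configured weights are all illustrative.

```python
def ets_allocate(link_gbps: float, weights: dict, demands: dict) -> dict:
    """Model ETS bandwidth sharing for one congested link.

    weights: guaranteed share per traffic class, in percent (must sum to 100).
    demands: offered load per traffic class, in Gbps.
    Returns the bandwidth each class receives, in Gbps.
    """
    if sum(weights.values()) != 100:
        raise ValueError("weights must sum to 100 percent")
    alloc = {c: 0.0 for c in weights}
    active = {c for c in weights if demands.get(c, 0) > 0}
    remaining = float(link_gbps)
    while active and remaining > 1e-9:
        total_weight = sum(weights[c] for c in active)
        if total_weight == 0:
            break
        satisfied = set()
        spent = 0.0
        for c in active:
            share = remaining * weights[c] / total_weight  # weighted share this round
            grant = min(share, demands[c] - alloc[c])      # never exceed the demand
            alloc[c] += grant
            spent += grant
            if alloc[c] >= demands[c] - 1e-9:
                satisfied.add(c)
        remaining -= spent
        if not satisfied:
            break                      # every active class is limited by its share
        active -= satisfied            # leftover bandwidth goes to the other classes
    return alloc
```

With a 100 Gbps link and a 50/50 split between a hypothetical `roce` class and a `lan` class, both classes get 50 Gbps when each offers 80 Gbps. If `roce` offers only 20 Gbps, `lan` can use the remaining 80 Gbps, so the guarantee is a minimum rather than a cap.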

Data Center Bridging Exchange

The DCBx protocol is a discovery and exchange protocol for communicating configuration and capabilities between peers. It enables the automatic exchange of Ethernet parameters and discovery of capabilities between switches and endpoints, which helps ensure consistent configuration across a data center bridging network.

Quantized Congestion Notification

The QCN protocol provides a means for switches to notify sources that there is congestion on the network, allowing the sources to reduce their traffic flow.

This reduces the need for pauses while maintaining the flow of critical traffic.

QCN is only supported in pure L2 environments and is rarely used now that RoCEv2 has become the mainstream RoCE solution.

QCN is not supported in DCB-based solutions on HPE Aruba Networking CX switches.

IP Explicit Congestion Notification

Although IP ECN is not officially part of the DCB protocol suite, RoCEv2 supports it: when a receiver sees congestion signaled in the IP ECN bits of incoming traffic, it sends a Congestion Notification Packet (CNP) back to the endpoint that originated that traffic.

For ECN to work properly, ECN must be enabled on both endpoints and on all intermediate devices between the endpoints.

To reduce packet loss and latency, ECN notifies end nodes and connected devices of congestion so that they can slow down their transmission rates until the congestion is resolved.

Using ECN with PFC can improve performance by allowing endpoints to adjust their transmission rates before a PFC pause is required.
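The marking step can be sketched as follows. The ECN field is the two low-order bits of the IPv4 ToS (or IPv6 Traffic Class) byte, and the four codepoints come from RFC 3168. Everything else here is a simplifying assumption: the function names are made up, and a single fixed queue threshold stands in for the probabilistic marking between a minimum and maximum threshold that real switches typically use.

```python
NOT_ECT = 0b00   # sender is not ECN-capable
ECT_1 = 0b01     # ECN-capable transport, codepoint 1
ECT_0 = 0b10     # ECN-capable transport, codepoint 0
CE = 0b11        # congestion experienced


def ecn_of(tos: int) -> int:
    """Extract the 2-bit ECN field from a ToS / Traffic Class byte."""
    return tos & 0b11


def mark_on_congestion(tos: int, queue_depth: int, threshold: int) -> int:
    """Return the ToS byte after a switch has evaluated its egress queue."""
    if queue_depth < threshold:
        return tos                    # no congestion, forward unchanged
    if ecn_of(tos) == NOT_ECT:
        return tos                    # cannot mark a packet that is not ECN-capable
    return tos | CE                   # set CE, leaving the DSCP bits untouched


def receiver_should_send_cnp(tos: int) -> bool:
    """A RoCEv2 receiver answers CE-marked packets with a CNP to the sender."""
    return ecn_of(tos) == CE
```

A packet with DSCP 26 and ECT(0) has a ToS byte of `(26 << 2) | ECT_0`. If it crosses a queue that is over the threshold, the switch rewrites only the ECN bits to CE, the receiver sees the mark and sends a CNP, and the sender lowers its rate before the queue grows far enough to trigger a PFC pause.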

DCB and RoCE Network Requirements

PFC should be used with both RoCEv1 and RoCEv2, and IP ECN is highly recommended for RoCEv2.

Each protocol can be summarized in the table below.

| Feature | RoCEv1 | RoCEv2 | Note |
|---|---|---|---|
| PFC | Yes | Yes | Always use. If not used, RDMA benefits are lost under congestion. |
| ETS | Yes | Yes | Must be used in a converged environment. If not used, the lossless traffic class can be starved of bandwidth. |
| DCBx | Yes | Yes | Not required, but recommended. |
| QCN | Yes | No | Not required, but recommended for multi-hop L2 RoCEv1. QCN helps address pause unfairness and victim flow issues. |
| IP ECN | No | Yes | Highly recommended for L3 RoCEv2. ECN helps address pause unfairness and victim flow issues. |

While PFC acts as a fast-acting mechanism to handle microbursts, congestion notification (CN) helps smooth traffic flow and reduces pause storms under normal load.

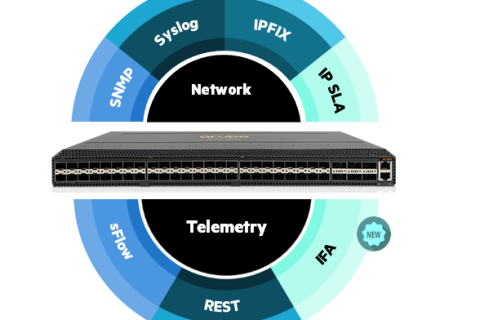

Storage Network Protocol Support on AOS-CX Switches

All AOS-CX switches in the data center product line support the DCB protocols, as shown in the table below.

The number of lossless pools per port varies by product, but switches running AOS-CX version 10.12 or later support all storage network protocols, including RoCEv1 and RoCEv2.

In this post, we covered the theory behind RoCE before moving on to configuration.

Next, we'll look at how to configure RoCE on a real AOS-CX switch.